Some readers may be curious about the technical architecture inside a DSP system. The diagrams below show the technical architecture of some DSP systems and are provided for reference. Non-technical readers can also use them to get an intuitive feel for how such a system works. The descriptions are kept brief.

1. Technical architecture summary

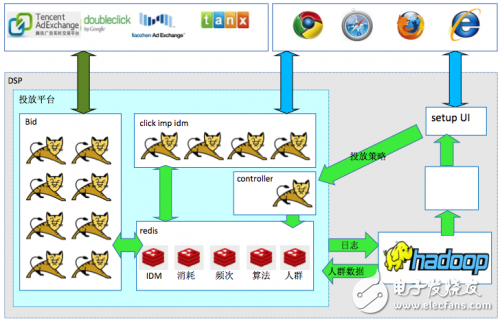

As shown in Figure 7-22, a DSP system involves the following parts: the delivery platform, the campaign setup user interface (Setup UI), reporting (Report), the algorithm engine, and so on. The algorithm engine module is mainly responsible for big data and machine learning. It uses distributed technologies (such as Hadoop) extensively to model user logs and audience data and to run machine-intelligence processing. The algorithm engine places the processed audience data, algorithm models, and other data in a large in-memory store (such as Redis) so that the Bid delivery engine can query and use them quickly.

Everything is kept in memory so that the whole bidding process completes within 100 ms. Processing on the DSP side must stay under 30 ms, and the remaining time is left for network communication. The Bid delivery engine runs as a typical large cluster so that it can respond to highly concurrent requests and still process each request in under 30 ms. Its delivery rules (budget, frequency, delivery policy settings, and more) are also held in memory for fast lookup. The delivery policy settings themselves are entered by the user through the interface of the delivery settings module. There are also some important auxiliary modules, such as the ad impression and click data collection module, the ID mapping module, the big data report module, and the built-in DMP module.

Figure 7-22 Example of a technical architecture summary
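To make the in-memory rule lookup concrete, here is a minimal sketch. It assumes a plain dictionary as a stand-in for a store such as Redis, and the key names (`spend:…`, `freq:…`), the function names, and the rule fields are all hypothetical. It only illustrates why budget and frequency checks that touch nothing but memory fit easily inside the 30 ms DSP-side budget.

```python
import time
from dataclasses import dataclass, field

DSP_BUDGET_MS = 30  # DSP-side processing budget quoted in the text


@dataclass
class InMemoryStore:
    """Plain-dict stand-in for an in-memory store such as Redis (assumption)."""
    data: dict = field(default_factory=dict)

    def get(self, key, default=0):
        return self.data.get(key, default)

    def incr(self, key, amount=1):
        self.data[key] = self.data.get(key, 0) + amount
        return self.data[key]


def can_deliver(store, campaign_id, user_id, daily_budget, freq_cap):
    """Check budget and frequency rules using only in-memory lookups."""
    spent = store.get(f"spend:{campaign_id}")
    seen = store.get(f"freq:{campaign_id}:{user_id}")
    return spent < daily_budget and seen < freq_cap


def handle_request(store, campaign_id, user_id, daily_budget, freq_cap):
    """Return (decision, elapsed_ms); an answer over budget counts as no-bid."""
    start = time.perf_counter()
    ok = can_deliver(store, campaign_id, user_id, daily_budget, freq_cap)
    elapsed_ms = (time.perf_counter() - start) * 1000
    return ok and elapsed_ms < DSP_BUDGET_MS, elapsed_ms
```

In a real deployment the store would be a networked cache shared by the whole bidder cluster, so the round trip to it would also count against the 30 ms budget.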

2. Overview of DSP internal technical processing flow

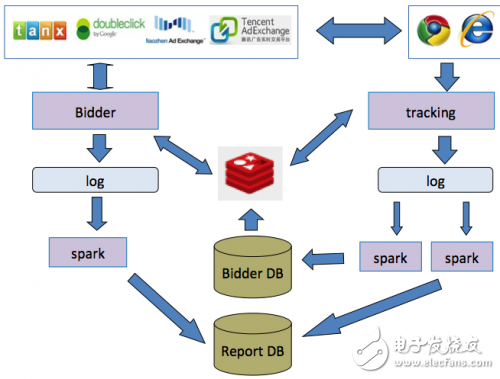

Internal processing in a DSP relies on several key facilities: the raw massive log system, a massively parallel message processing queue (such as Spark), a massive in-memory system (such as Redis), the relational database of the business system, and so on.

As shown in Figure 7-23, one processing line handles ad requests. While processing a huge volume of real-time ad requests, the Bidder generates a large amount of raw logs and also interacts frequently with the in-memory system, reading and writing data such as the frequency and spend associated with ad requests. The ad request logs then pass through the parallel processing queue and are loaded into the report database and the corresponding big-data audience and model databases.

The other processing line collects monitoring data such as ad impressions and clicks. It also starts from a large volume of raw logs. The data collection engine interacts with the in-memory system to write impression- and click-related data such as frequency and spend. The impression and click logs are then processed through the parallel processing queue and loaded into the report database and the corresponding big-data audience and model databases. At the same time, the parallel processing queue runs a large amount of machine-intelligence analysis to update part of the audience data and model data, and pushes the updates to the database and in-memory system that the Bidder uses for real-time bidding.

Figure 7-23 Example of the internal technical processing flow of the DSP
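The collection line can be sketched in a few lines. This is a single-process illustration only: the event fields, the key layout, and the choice to charge spend per impression are assumptions, a dictionary stands in for the in-memory system, and an ordinary loop stands in for the parallel processing queue.

```python
from collections import defaultdict


def collect_event(store, event):
    """Collection step: update frequency and spend counters held in memory."""
    if event["type"] == "impression":
        freq_key = ("freq", event["campaign"], event["user"])
        store[freq_key] = store.get(freq_key, 0) + 1
        spend_key = ("spend", event["campaign"])
        # Assumption: spend is charged per impression.
        store[spend_key] = store.get(spend_key, 0.0) + event["cost"]


def build_report(log):
    """Batch step: aggregate raw log events into per-campaign report rows."""
    report = defaultdict(lambda: {"impressions": 0, "clicks": 0, "cost": 0.0})
    for event in log:
        row = report[event["campaign"]]
        if event["type"] == "impression":
            row["impressions"] += 1
            row["cost"] += event["cost"]
        elif event["type"] == "click":
            row["clicks"] += 1
    return dict(report)


raw_log = [
    {"type": "impression", "campaign": "c1", "user": "u1", "cost": 0.5},
    {"type": "impression", "campaign": "c1", "user": "u1", "cost": 0.25},
    {"type": "click", "campaign": "c1", "user": "u1"},
]
store = {}
for event in raw_log:
    collect_event(store, event)  # real-time counters read by the Bidder
report = build_report(raw_log)   # rows destined for the report database
```

The same raw log feeds both outputs, which mirrors the figure: counters go to memory for the Bidder, aggregates go to the report database.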

3. DSP bidding core processing flow summary (<30ms)

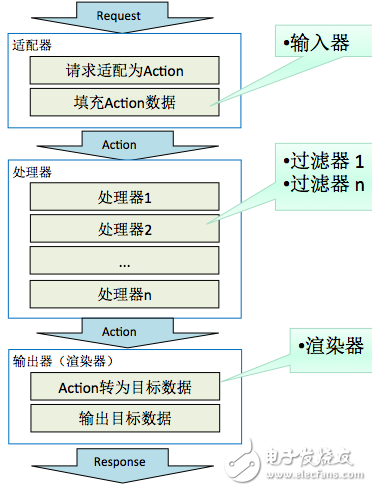

As shown in Figure 7-24, the DSP Bidder module is designed to keep core processing time extremely short, under 30 ms. To cope with the different interfaces of different ADX traffic sources, the adapter design pattern is used when accepting ad requests and returning responses: each ADX platform interface gets its own adapter, while the overall processing flow stays unchanged. The intermediate business processing uses the filter design pattern, so when a new service is added, a new filter implementation can be added as the business requires. The advantage is that the overall framework of the Bidder's core processing flow stays relatively stable and does not change as the business changes. This gives strong business flexibility, plus horizontal scalability for high performance.

Figure 7-24 Example of DSP bidding core processing flow
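The combination of the adapter and filter patterns described above can be sketched as follows. The two exchanges, their field names (`w`, `bidfloor`, `size`, `min_cpm`), and the filter classes are hypothetical; a real ADX defines its own request and response formats.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class BidRequest:
    """Internal, exchange-neutral request (fields are illustrative)."""
    slot_width: int
    slot_height: int
    floor_price: float


class AdxAdapter(ABC):
    """One adapter per ADX: translate requests in and responses out."""

    @abstractmethod
    def parse(self, raw: dict) -> BidRequest: ...

    @abstractmethod
    def render(self, ad_id: str, price: float) -> dict: ...


class ExchangeAAdapter(AdxAdapter):
    def parse(self, raw):
        return BidRequest(raw["w"], raw["h"], raw["bidfloor"])

    def render(self, ad_id, price):
        return {"ad": ad_id, "price": price}


class ExchangeBAdapter(AdxAdapter):
    def parse(self, raw):
        width, height = raw["size"].split("x")
        return BidRequest(int(width), int(height), raw["min_cpm"])

    def render(self, ad_id, price):
        return {"creative_id": ad_id, "cpm": price}


class SizeFilter:
    def keep(self, ad, req):
        return (ad["w"], ad["h"]) == (req.slot_width, req.slot_height)


class FloorFilter:
    def keep(self, ad, req):
        return ad["bid"] >= req.floor_price


def handle(raw, adapter, filters, ads):
    """Fixed core flow: parse -> run filter chain -> pick highest bid -> render."""
    req = adapter.parse(raw)
    candidates = [ad for ad in ads if all(f.keep(ad, req) for f in filters)]
    if not candidates:
        return None  # no bid
    best = max(candidates, key=lambda ad: ad["bid"])
    return adapter.render(best["id"], best["bid"])
```

Supporting a new exchange means adding one adapter class, and supporting a new business rule means appending one filter to the list; `handle` itself does not change.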

4. Overview of the bidding process

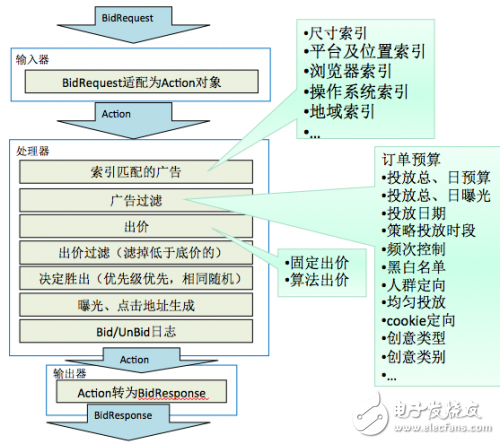

As shown in Figure 7-25, the Bidder's processing is divided, following the business flow, into several stages. First, the indexer quickly filters ads. The advantage of an indexer is extremely high retrieval efficiency, but it can only handle simple filter conditions, for example a size index, a platform and ad slot index, a browser index, an operating system index, or a geographic index. Second, ad filtering applies the delivery-policy rules that cannot use the indexer and must be computed, such as budget, impressions, date, frequency, audience targeting, and creative type. These two layers of filtering produce the list of candidate ads that can be served for the ad request.

Third, the bidding algorithm assigns a bid to each ad in the candidate list. This may be a dynamic bidding algorithm or a fixed bid. Which bid strategy is used, and whether an algorithm is applied at all, is set manually in the delivery settings interface. Fourth, floor-price filtering removes candidates whose bids are below the reserve price carried in the ad request. Fifth, the final ranking decides the winner: candidates are ranked by their bids and by the priority weights given by the algorithm, and the top-ranked ad is returned, with its ad content, as the bid. Sixth, dynamic impression and click code is generated for the winning content. This dynamic code serves many purposes, such as anti-fraud and carrying tracking parameters through the whole flow. Finally, bid/no-bid logging starts asynchronously once processing ends.

Figure 7-25 Example of the auction process summary
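The first five stages of the flow above can be sketched as a small pipeline. All field names (`size`, `geos`, `freq_cap`, `strategy`, `score`, `weight`) are hypothetical, the "dynamic" bid is just a fixed bid scaled by a score supplied with the request, and the tracking-code and logging stages are omitted.

```python
from collections import defaultdict


def build_index(ads):
    """Inverted index: (attribute, value) -> set of ad ids."""
    index = defaultdict(set)
    for ad in ads:
        index[("size", ad["size"])].add(ad["id"])
        for geo in ad["geos"]:
            index[("geo", geo)].add(ad["id"])
    return index


def index_lookup(index, request):
    """Stage 1: intersect posting sets for the simple, indexable conditions."""
    by_size = index.get(("size", request["size"]), set())
    by_geo = index.get(("geo", request["geo"]), set())
    return by_size & by_geo


def passes_rules(ad, request, state):
    """Stage 2: computed rules (budget, frequency) an index cannot express."""
    spent = state["spend"].get(ad["id"], 0.0)
    seen = state["freq"].get((ad["id"], request["user"]), 0)
    return spent < ad["budget"] and seen < ad["freq_cap"]


def price(ad, request):
    """Stage 3: fixed bid, or a stand-in dynamic bid scaled by a score."""
    if ad["strategy"] == "fixed":
        return ad["bid"]
    return ad["bid"] * request.get("score", 1.0)


def run_auction(ads, index, request, state):
    by_id = {ad["id"]: ad for ad in ads}
    candidates = [by_id[i] for i in index_lookup(index, request)]
    candidates = [ad for ad in candidates if passes_rules(ad, request, state)]
    bids = [(price(ad, request), ad) for ad in candidates]
    # Stage 4: drop bids below the reserve price in the request.
    bids = [(p, ad) for p, ad in bids if p >= request["floor"]]
    if not bids:
        return None  # no bid
    # Stage 5: rank by bid times priority weight and take the top candidate.
    p, winner = max(bids, key=lambda b: b[0] * b[1].get("weight", 1.0))
    return {"ad": winner["id"], "price": p}
```

The index lookup is a set intersection and costs little regardless of how many ads exist, which is why the cheap conditions go first and the per-ad computed rules run only on the survivors.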

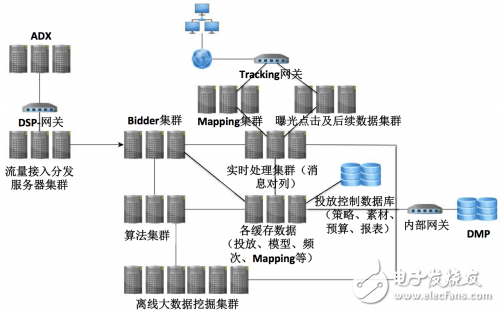

5. Distributed Cluster Overview

As shown in Figure 7-26, to cope with massive ad bidding traffic and with the need for a distributed big-data computing infrastructure, the DSP's system architecture must be designed to scale horizontally across a distributed cluster. The architecture supports high concurrency, big data, high availability, and high fault tolerance.

Figure 7-26 Example of a distributed cluster summary
