[Application of AI in robot motion control] Motion control of complex robots has long held back the development of the robotics industry and has never been solved well. Even the robots of Boston Dynamics, which represents the highest level of robotics, are still far from practical use. AI has developed rapidly over the past two years and has been applied almost everywhere, like a cure-all. Naturally, this includes robot control, where it seems to have achieved good results. Some time ago, UC Berkeley reinforcement learning expert Pieter Abbeel founded Embodied Intelligence, a company whose business directly covers the three hot areas of VR, AI, and robotics.

To understand how new technologies such as VR and AI can be used in robot control, this article gives a simplified overview of their application based on a number of related papers and publicly available materials, including Pieter Abbeel's talks. The finding is that AI and VR do have real applications in robot control, but there is still a long way to go before substantial breakthroughs are made.

Several types of robot control

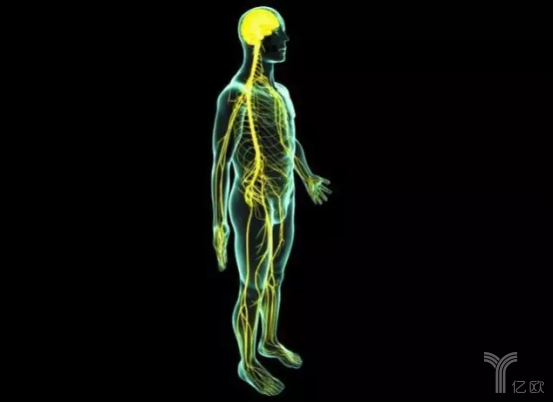

Many research goals in robotics amount to simulating human intelligence, so studying the human control system is highly relevant to robots. The human nervous system is complex and complete, consisting of the cerebrum, cerebellum, brainstem, spinal cord, and neurons. It is divided into the central nervous system and the peripheral nervous system. The central nervous system consists of the brain and spinal cord and is the most important part. The peripheral nervous system consists of the nerves that extend from the brain and spinal cord throughout the body. Numerous neurons exist throughout the nervous system and form a neural network.

The central nervous system is responsible for motion control and is mainly divided into three layers:

Brain: the highest level, responsible for the overall planning of movement and for issuing tasks.

Cerebellum: the middle level, responsible for coordinating, organizing, and implementing movement. The balance of the human body is controlled by the cerebellum.

Brainstem and spinal cord: the lowest level, responsible for executing movement, specifically controlling the muscles and skeleton.

The three levels regulate movement differently, from high to low: each lower level receives control commands from the level above and carries them out. The brain can control the spinal motor nerves either directly or indirectly through the brainstem.

If a robot is compared to a human, the robotic arm controller is similar to the spinal cord: it controls the specific movement of the motors (muscles) and the mechanical structure (bones). The motion controller of a legged robot is similar to the cerebellum: it controls balance and coordination. The robot's operating system layer is similar to the brain: it perceives and recognizes the world and issues complex motion goals.

Based on this analogy and on the current state of various types of robots, robot motion control can be roughly divided into four tasks:

Spinal cord control - basic control of robot movement. This is the main problem in the low-level motion control of industrial robots, robotic arms of all kinds, and UAVs.

Cerebellar control - balance and motion coordination of legged robots. This is currently a difficult point in robot control that has not yet been broken through. The clear leader at present is Boston Dynamics.

Brain control - perception of the environment. This mainly covers navigation and path planning for robots whose low-level motion control is already encapsulated, such as robot vacuums and drones. It requires environment perception, localization of the robot itself and its target, navigation, and motion planning.

Brain control - cognition of and interaction with the environment, that is, the robot carrying out specific interactive tasks, such as controlling a robotic arm to grasp and manipulate objects. This is an important problem that service robots need to break through.

AI applications in each type of control

1. Spinal cord control

Two typical applications of spinal cord control are robotic arm path planning and drone flight control. These problems belong to traditional automatic control theory, which is built on mathematical and dynamics modeling, has been developed for many years, has a very complete theoretical and practical foundation, and has achieved very good results. Deep learning is currently popular and could in theory be used for this kind of control, but so far it has not been applied to such basic control. The main reasons may be:

1) Industrial robots repeat specific actions with high accuracy. Automatic control theory already solves this well mathematically, and because the principles are understood, the result is a white-box system. With a reliable white-box solution available, there is no need to replace it with a black-box neural network controller.

2) Industrial robots and similar applications demand highly stable control algorithms. A neural network controller is a black-box solution, and its stability cannot be proven from data. Once a problem occurs in a neural network controller, it is difficult to explain and to fix.

3) Neural network algorithms are trained on large amounts of data. In existing motion control tasks such as flight control, however, real experimental data is expensive to obtain, and collecting it in large quantities is very difficult.
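To make the "white box" idea in reason 1) concrete, here is a minimal sketch of a textbook discrete PID controller driving a simulated joint. It is only an illustration, not the controller of any particular robot: the plant (a unit-mass joint with a damping coefficient of 0.5), the gains, and the names `PID` and `simulate` are all assumptions chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook discrete PID controller (illustrative only)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, setpoint: float, measured: float, dt: float) -> float:
        """Return the control output for one time step of length dt."""
        error = setpoint - measured
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(target: float = 1.0, steps: int = 2000, dt: float = 0.01) -> float:
    """Drive a hypothetical unit-mass, damped joint toward `target`.

    Returns the joint position after `steps` time steps.
    """
    pid = PID(kp=20.0, ki=5.0, kd=4.0)  # gains picked by hand for this toy plant
    position, velocity = 0.0, 0.0
    for _ in range(steps):
        force = pid.step(target, position, dt)
        acceleration = force - 0.5 * velocity  # assumed damping coefficient 0.5
        velocity += acceleration * dt
        position += velocity * dt
    return position


if __name__ == "__main__":
    print(f"final position: {simulate():.4f}")
```

Every term in the control law can be read off and analyzed, which is the property that makes such controllers easy to verify compared with a learned black-box policy.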

2. Cerebellar control

The typical cerebellar control problem is the balance and motion coordination of humanoid, bipedal, and multi-legged robots. This area has also been studied on the basis of traditional control theory, but it is much harder than a robotic arm or drone because there are many more degrees of freedom. Bipedal robots strike most people as slow, stiff, and unstable. Boston Dynamics' Atlas, BigDog, and others are the most advanced in this area. Boston Dynamics has not disclosed the technology it uses, but Google engineer Eric Jang has said that, according to information from talks, BD's robot control strategy uses model-based controllers and does not involve neural network algorithms.

3. Environmental perception

The main scenarios are path planning for service robots, target tracking for drones, and visual positioning for industrial robots. By perceiving the environment, the system issues target motion commands to an already encapsulated motion control system.

Target Recognition

Target recognition during environmental perception, such as identifying and tracking targets from a drone, is more accurate with the help of neural networks, and has already been applied in drones such as DJI's.

Positioning, navigation, and path planning

At present, robot positioning and navigation are mainly based on the popular vSLAM or lidar SLAM technologies. The mainstream lidar approach can be divided into three steps. The middle step may involve some deep learning; most of the rest does not.

Step 1: SLAM. Build a map of the scene: construct a 2D or 3D point cloud of the scene with lidar, or reconstruct the 3D scene.

Step 2: Build a semantic map. Objects may be recognized and segmented so that the objects in the scene are labeled. (Some systems skip this step.)

Step 3: Plan a path algorithmically and drive the robot along it.
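The final planning step can be sketched with a simple grid search. The example below is a minimal illustration, assuming the map from the first step has already been reduced to a small 2D occupancy grid. Breadth-first search stands in here for whatever planner a real system uses, and the grid contents and the function name `plan_path` are hypothetical.

```python
from collections import deque

# Hypothetical occupancy grid: 0 = free cell, 1 = obstacle.
OCCUPANCY_GRID = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]


def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid.

    Returns the shortest list of (row, col) cells from start to goal,
    or None if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


if __name__ == "__main__":
    print(plan_path(OCCUPANCY_GRID, (0, 0), (2, 0)))
```

The returned list of cells would then be handed to the encapsulated motion controller as a sequence of waypoints.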

4. Environmental interaction

Typical application scenario: a robotic arm grasping a target object. Interaction with the environment has always been difficult for traditional automatic control. In recent years, AI techniques built on reinforcement learning have been applied to such problems and have made some research progress. Whether this will be the mainstream direction in the future, however, is still highly controversial.

1) Reinforcement learning

In the reinforcement learning framework, an agent containing a neural network is responsible for making decisions. The agent takes the environment state collected by the robot's sensors as input and outputs an action command that controls the robot. After the robot acts, the agent observes the new environment state and the reward resulting from the action, then decides on the next action. The reward is set according to the control objective and can be positive or negative. For example, if the objective is autonomous driving, the positive reward is reaching the destination, the negative reward is failing to reach it, and a worse reward is an accident. This process is then repeated with the goal of maximizing reward.
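The observe, act, and reward loop described above can be sketched in a few lines. This is a minimal illustration under simplifying assumptions: a lookup table of action values (tabular Q-learning) replaces the neural network, and the environment is a hypothetical six-cell corridor in which the agent starts at cell 0 and is rewarded for reaching cell 5. The names `step`, `train`, and `greedy_policy` and all numeric settings are chosen for this example only.

```python
import random

N_STATES = 6        # corridor cells 0..5; cell 5 is the goal
ACTIONS = (-1, +1)  # move one cell left or right


def step(state, action):
    """Hypothetical environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True   # positive reward: goal reached
    return next_state, -0.01, False    # small negative reward for every other step


def train(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Decide: explore at random, otherwise take the best known action.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            # Act, then observe the new state and the reward.
            next_state, reward, done = step(state, ACTIONS[a])
            # Learn: move the estimate toward reward plus discounted future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Best action for each non-goal cell according to the learned values."""
    return [ACTIONS[0] if row[0] > row[1] else ACTIONS[1] for row in q[:-1]]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

In a real robot the table would be replaced by a neural network over sensor inputs, but the loop of deciding, acting, observing, and updating toward higher reward is the same.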

The control process in reinforcement learning is inherently a feedback control process, and it is the foundation of AI for robot control. On this basis, reinforcement learning has produced a number of research results in robot control.

2) Finding the target in the environment

In 2016, Fei-Fei Li's group released a paper on deep reinforcement learning in which the robot, given an image of the target as input, finds the target without building a map. The general idea is: based on the image the machine currently sees, decide how to move, then look again and decide the next step, until the target is found. Because the paper takes the target image as input, the trained neural network generalizes to different targets.

This way of finding things is closer to how humans think. The trained controller does not memorize the positions of objects, nor does it know the layout of the house, but it does remember how to move toward each object from each position.

3) Robot grasping

Traditional robotics holds that grasping requires a clear understanding of the three-dimensional geometry of the object: analyze the contact points and the forces to apply, then work backward to how the robot hand must move to reach those points. Irregularly shaped and flexible objects, however, are hard to grasp this way. A towel, for example, may need to be treated as a series of rigid links for dynamic modeling and analysis, which is computationally expensive. And for something like a rubber duck, the elasticity cannot be judged from its appearance, so it is hard to calculate the correct force to apply.

The robot control research of Pieter Abbeel, DeepMind, and OpenAI is all based on deep reinforcement learning. In reinforcement learning based grasping, the image seen from the robot's viewpoint is the input and successfully grasping the object is the goal; the machine is trained repeatedly until it can grasp objects without any modeling or force analysis. Pieter Abbeel has demonstrated robots performing complex actions such as folding towels, opening bottle caps, and assembling toys.

However, reinforcement learning based approaches still have many problems: low efficiency, long reasoning processes, tasks that are hard to describe, no lifelong learning, and limited ability to obtain information from the real world. Some of these have been improved by introducing meta-learning, one-shot learning, transfer learning, and VR teaching; others remain hard to solve.

5. Dexterity Network

Given the various problems of deep reinforcement learning, Ken Goldberg, Pieter Abbeel's colleague at UC Berkeley, takes a different research approach called Dexterity Network (Dex-Net). First, a large data set is built using the force analysis and modeling of traditional robotics. Each entry in the data set contains the model of an object together with the ways of applying force that grasp the object stably in different poses; these grasps are computed from the object model. The data is then used to train a neural network. When a new object is presented, the neural network determines which object in the data set it most resembles, and the most stable grasp for the new object is computed from the grasps stored for that most similar object.
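The lookup idea described above, finding the most similar known object and reusing its stored grasp, can be sketched as follows. This is not the Dex-Net implementation: plain Euclidean distance over a hand-made shape descriptor is swapped in for the trained neural network, and every entry in `DATASET`, along with the names `GraspEntry`, `most_similar`, and `suggest_grasp`, is hypothetical.

```python
import math
from typing import NamedTuple


class GraspEntry(NamedTuple):
    name: str
    features: tuple  # hypothetical shape descriptor: (width, height, depth) in cm
    grasp: tuple     # hypothetical stored grasp: (approach angle in degrees, gripper width in cm)


# Made-up entries standing in for grasps precomputed from object models.
DATASET = [
    GraspEntry("mug", (8.0, 10.0, 8.0), (90.0, 8.5)),
    GraspEntry("pen", (1.0, 14.0, 1.0), (0.0, 1.5)),
    GraspEntry("box", (20.0, 15.0, 10.0), (45.0, 10.5)),
]


def most_similar(features, dataset=DATASET):
    """Return the stored entry whose descriptor is closest to `features`."""
    return min(dataset, key=lambda entry: math.dist(entry.features, features))


def suggest_grasp(features, dataset=DATASET):
    """Reuse the grasp stored for the most similar known object."""
    return most_similar(features, dataset).grasp


if __name__ == "__main__":
    new_object = (7.5, 9.0, 8.5)  # an unseen, roughly mug-sized object
    print(most_similar(new_object).name, suggest_grasp(new_object))
```

A real system would go on to adapt the retrieved grasp to the new object's geometry rather than reuse it unchanged.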

An important drawback of Ken Goldberg's approach is that the computation required is very large. The whole algorithm occupies 1,500 virtual machines on Google Cloud servers. This approach has also drawn attention to the concept of "cloud robotics".

At present, the approaches of Pieter Abbeel and Ken Goldberg are still the subject of academic debate. New research results keep emerging, and many problems remain unsolved. Stability and robustness in particular are the focus of contention. A smart speaker that misrecognizes speech produces nothing worse than a joke, but a robot system must meet very high standards of stability and reliability: when the system makes a mistake, it can at best damage objects and at worst endanger human life. Pieter Abbeel has also acknowledged that robustness and stability have not yet been addressed, and the overall level does not yet seem to have reached that of a commercial product.

Summary

In general, AI has made some progress in robot control over the past two years, chiefly through reinforcement learning, and especially on environmental interaction problems that were previously hard to break through. However, neural network based control systems seem unlikely to solve problems such as robustness in the short term, so practical application is still a long way off. With the combined efforts of different research approaches, we hope that the robot control problem can be cracked soon.
