Can real-time ray tracing appear on the next generation of consoles? I have worked on real-time and offline rendering for many years, and my opinion is that on the next console generation it is essentially impossible. Over the next 3-5 years, some ray-tracing-based algorithms that stop short of global illumination, such as specular reflections, may be used to compensate for the defects of existing algorithms. Real adoption of ray tracing will be a long process that needs the whole industry to work together. As for true unbiased global illumination, the computation needed to do it in real time will remain too large for the foreseeable future.

When ray tracing comes up, many people's first reaction is that it is the holy grail of graphics rendering, and that games will look as stunning as Hollywood blockbusters. That is a subjective assumption with little practical basis.

Misconception 1: Ray tracing is equivalent to global illumination, or even to a whole family of effects such as caustics, clouds, subsurface scattering, and physically based shading.

In fact, ray tracing simply refers to computing the intersection between a given ray and a set of triangles in three dimensions. Logically this is a very simple operation, and many global illumination algorithms can be built on top of it. The essential reason the operation is so useful is the rendering equation

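$$L_o(x, \omega_o) = L_e(x, \omega_o) + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (\omega_i \cdot n)\, d\omega_i$$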

The integral is taken over the hemisphere around the surface normal. Anything in that hemisphere, whether a light source or other objects of any shape and material, affects the color of the surface. When we know nothing about what lies in the hemisphere, shooting rays outward in all directions is the most general, though inefficient, way to find out. Beyond sampling the scene for rendering, ray tracing itself is also used for collision detection, pathfinding, and other tasks that have nothing to do with rendering.
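To make the basic operation concrete, here is a minimal sketch of a ray-triangle intersection test using the Möller-Trumbore method, plus a brute-force search for the nearest hit. The function names (`intersect_ray_triangle`, `closest_hit`) and the tuple-based vectors are illustrative assumptions, not code from any particular engine; a real renderer would use an acceleration structure instead of looping over every triangle.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def intersect_ray_triangle(
    origin: Vec3, direction: Vec3, v0: Vec3, v1: Vec3, v2: Vec3, eps: float = 1e-9
) -> Optional[float]:
    """Return the distance t along the ray to the hit point, or None on a miss."""
    edge1 = sub(v1, v0)
    edge2 = sub(v2, v0)
    pvec = cross(direction, edge2)
    det = dot(edge1, pvec)
    if abs(det) < eps:  # ray is parallel to the triangle plane
        return None
    inv_det = 1.0 / det
    tvec = sub(origin, v0)
    u = dot(tvec, pvec) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    qvec = cross(tvec, edge1)
    v = dot(direction, qvec) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, qvec) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin


def closest_hit(
    origin: Vec3, direction: Vec3, triangles: Sequence[Triangle]
) -> Optional[Tuple[float, int]]:
    """Brute-force nearest hit: returns (distance, triangle index) or None."""
    best: Optional[Tuple[float, int]] = None
    for index, (v0, v1, v2) in enumerate(triangles):
        t = intersect_ray_triangle(origin, direction, v0, v1, v2)
        if t is not None and (best is None or t < best[0]):
            best = (t, index)
    return best
```

The same `closest_hit` query serves a renderer sampling the hemisphere, a collision check, or a line-of-sight test for pathfinding; only the interpretation of the result differs.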

Misconception 2: Ray tracing will make games look like films, and rasterization-based methods are bad at lighting and need to be overthrown.

This simply ignores the tricks, techniques, and optimizations that the graphics community and game developers have accumulated over the past 20 years. Let me give two examples. The image below is an architectural visualization made with Unreal Engine 4. For a static scene like this, the light map handles the hardest part, diffuse interreflection, through pre-computation, so it costs nothing at runtime. UE4 uses photon mapping to compute the light map, which is a consistent global illumination algorithm. In other words, the final result, with screen-space reflections (SSR) and reflection probes added for the specular part, is basically the same as an offline render, and a small scene like this runs at 60 fps without any strain.

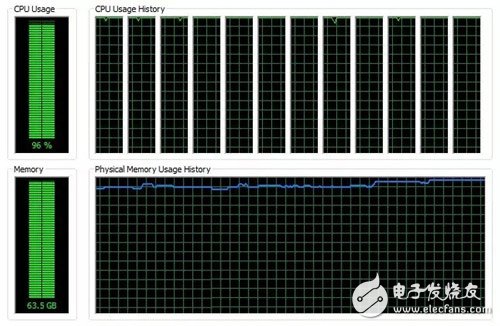

Another example is Unreal's Kite demo. Its terrain comes from field scans that Epic made in New Zealand, and the goal is probably to create a Pixar-style cinematic experience. I can't say that this demo is as accurate as the one above, and it is also heavy: it can't run at 60 fps, and the task manager shows every resource maxed out.

So what I want to say is that visually unbiased global illumination is an important part of the experience, but raster-based approaches can also deliver it in certain situations, and lighting is not the only gap between games and films. The huge difference in available resources between films and games, and the essential difference between the two media, are what keep game graphics from looking like film.

The resource gaps include the time available to compute one frame (1/60 of a second versus hours), computing power (an ordinary CPU and GPU versus a render farm), art assets (hundreds of thousands of triangles versus meshes subdivided so finely that a single pixel still covers many triangles), and the precision of physical simulation. The essential difference in experience is that film is linear: the director and effects artists only need each shot to look perfect from one angle at one moment, whereas a game is interactive. So ray tracing is not a silver bullet. A good real-time solution is one that allocates its precious time and its hardware and software resources sensibly among these different factors.

Of course, I am not being conservative for its own sake. As a basic way of sampling the scene, ray tracing is versatile and intuitive, and if performance catches up it will inevitably drive the development of many rendering techniques. Implementing it efficiently, however, is very difficult. Earlier answers have already analyzed some of the reasons; I will summarize them and add a few more.

Difficulty 1: The amount of computation is huge.

Suppose a game at 4K resolution uses a global illumination algorithm such as path tracing. Assume each pixel needs 1000 samples for the noise to converge (a very, very conservative number) and each sample's path has 5 bounces (also very short). The number of rays per second is then 3840 × 2160 × 1000 × 5 × 60 = 2,488,320,000,000, that is, about 2500G rays per second. This is probably at least 10,000 times more than the fastest current renderers can trace. The irony is that for film-quality imagery, intersection is only a small part of the cost compared with shading. I have studied and implemented a good deal of cutting-edge offline rendering research, and there are many ways to improve sampling efficiency, but whichever one you pick, a workload of this magnitude has no hope in real-time applications. The 1/60 second requirement is simply too harsh.

I don't want to be overly pessimistic, though. The 10,000-times gap applies only if you truly need unbiased path tracing. Even offline rendering uses many tricks that accept some bias to reduce noise and speed up convergence, so if ray tracing is really applied to games, other biased methods can cheat away a large part of this computation.
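The estimate above is plain multiplication, and it can be checked with a minimal sketch. The function name `rays_per_second` is illustrative, 4K is assumed to mean 3840 × 2160, and the "implied throughput" is only what the stated 10,000-times gap would mean if taken literally, not a measured figure for any renderer.

```python
def rays_per_second(width: int, height: int, samples_per_pixel: int,
                    bounces_per_sample: int, frames_per_second: int) -> int:
    """Rays needed per second, counting one ray per bounce of every sample."""
    return (width * height * samples_per_pixel
            * bounces_per_sample * frames_per_second)


# Figures from the text: 4K, 1000 samples per pixel, 5 bounces, 60 fps.
required = rays_per_second(3840, 2160, 1000, 5, 60)
print(f"{required:,} rays per second")  # 2,488,320,000,000 rays per second

# Assumption: read the "10,000 times" gap literally to get a throughput.
implied_throughput = required // 10_000

# Samples per pixel per frame that such a throughput could afford at 4K/60.
affordable_spp = implied_throughput / (3840 * 2160 * 5 * 60)
print(f"{implied_throughput:,} rays/s -> {affordable_spp:.1f} samples per pixel")
```

Under these assumptions the budget works out to about a tenth of a sample per pixel per frame, which is why biased shortcuts matter so much for any real-time use.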

Difficulty 2: Modern GPUs have optimized the raster pipeline to the extreme.

Every last bit of performance has already been squeezed out. On the one hand, rasterization is easier to implement in hardware than ray tracing, because triangles can be streamed through the pipeline stages. On the other hand, rasterization has been mainstream for 20 years, and vendors have had plenty of time and experience to accumulate tried-and-tested optimizations. Every time NVIDIA goes into detail about one of its GPU architectures, I am amazed at how many hardware optimizations there are at the bottom. Raster-based rendering algorithms have matured alongside that hardware, while the equivalent work for ray tracing would have to be developed from scratch. New ideas will certainly appear, but someone has to do the work.

Difficulty 3: The modern raster-based graphics pipeline is baked into GPUs, its interfaces are exposed in the APIs, and a large body of off-the-shelf algorithms is implemented in game engines and ingrained in the minds of game developers.

So adding ray tracing means changing the graphics pipeline. Does that mean a new kind of shader that emits rays, or running a large batch of rays like a compute shader and returning the results in bulk? Should the scene's acceleration structure be supported at the API level, or left to the developer? While performance still can't keep up with full global illumination algorithms, what other effects and algorithms can we try? Even once all of this is in place, it will take a long time for games on the market to adopt it. So I think that even after performance catches up, the spread of ray tracing will be slow and gradual, and the entire industry will need to work together.
